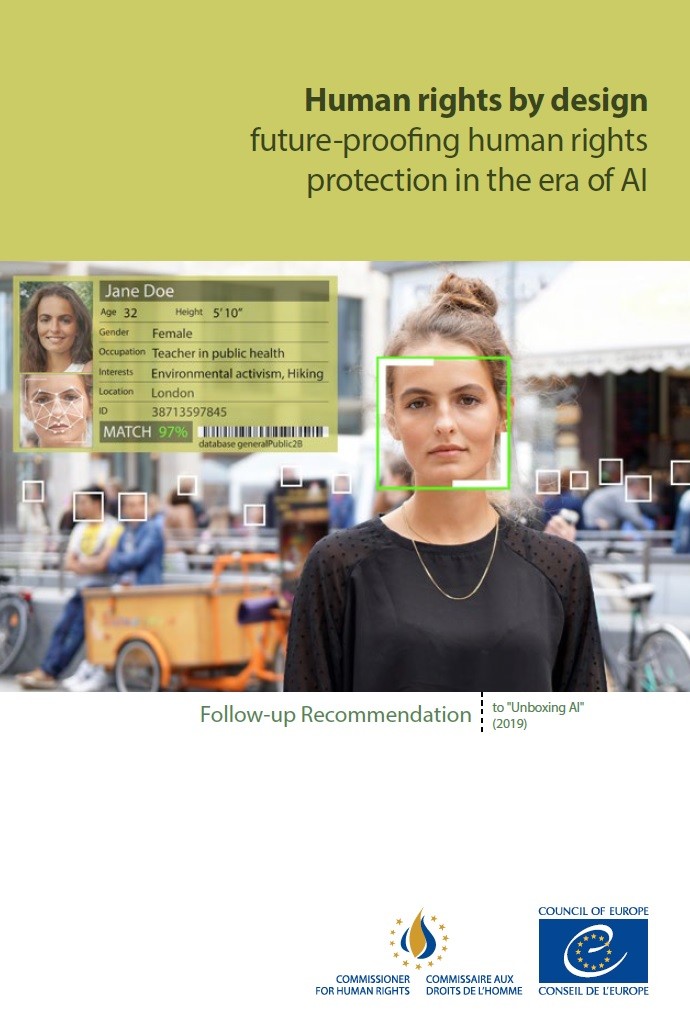

Follow-up recommendation to the recommendation "Unboxing Artificial Intelligence: 10 steps to protect human rights"

Technological development is accelerating, and Artificial Intelligence (AI) is playing an ever-growing role in all aspects of our lives, including public administration. Member states of the Council of Europe must keep up with the increasing reliance on automated processes and machine learning and ensure that they safeguard the human rights of everyone in society in this fast-evolving context.

This pressing need prompted the Council of Europe Commissioner for Human Rights, Dunja Mijatović, in 2019 to publish the Recommendation “Unboxing artificial intelligence: 10 steps to protect Human Rights”, which provides guidance to member states on the main principles that should be followed to prevent or mitigate the negative impacts of AI systems on human rights.

Member states have acted on some of the key areas identified in the 2019 Recommendation, but the overall approach has not been consistent. Human rights-centred regulation of AI systems is still absent on many fronts, and public authorities tend to get involved too late, and to move too slowly, for their engagement to be truly meaningful. While human rights norms and safeguards are technology-neutral and applicable to all contexts, including those involving AI systems, their enforcement is often lacking and oversight is sporadic.

This Follow-up Recommendation reviews the challenges faced by member states in implementing the 2019 Recommendation, such as the adequacy of assessment of human rights risks and impacts, the establishment of stronger transparency guarantees, and the requirement of independent oversight.

INTRODUCTION

CHAPTER 1 - HUMAN RIGHTS IMPACT ASSESSMENTS

CHAPTER 2 - HUMAN RIGHTS STANDARDS IN THE PRIVATE SECTOR

CHAPTER 3 - INFORMATION AND TRANSPARENCY

CHAPTER 4 - PUBLIC CONSULTATIONS

CHAPTER 5 - PROMOTION OF AI LITERACY

CHAPTER 6 - INDEPENDENT OVERSIGHT

CHAPTER 7 - EFFECTIVE REMEDIES

CONCLUDING OBSERVATIONS AND RECOMMENDATIONS